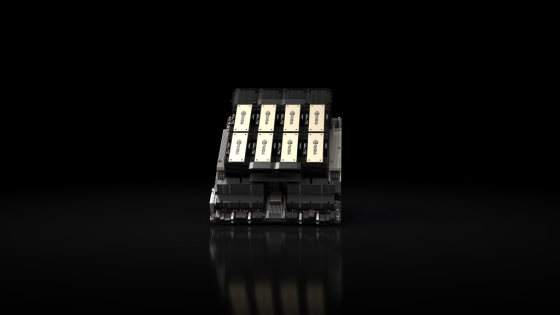

Nvidia HGX H200: Nvidia Upgrades Top-of-the-Line Chip for AI Work

Nvidia, the leading maker of graphics processing units (GPUs), has revealed details of its latest high-performance chip for AI work, the HGX H200. The new GPU builds on its predecessor, the H100, with significant gains in memory bandwidth and capacity that help it handle intensive generative AI workloads.

What is the difference between HGX H200 and H100?

The HGX H200 offers 1.4x more memory bandwidth and 1.8x more memory capacity than the H100, making it a notable advancement in the AI computing landscape. The main improvement is the adoption of a new, faster memory specification called HBM3e, raising the GPU’s memory bandwidth to an impressive 4.8 terabytes per second and increasing its total memory capacity to 141 GB.
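As a rough check on those multipliers, the short sketch below recomputes them from raw figures. The H200 numbers are the ones quoted above; the H100 baseline (80 GB of memory, 3.35 TB/s of bandwidth, the commonly cited figures for the SXM variant) is an assumption that does not come from this article, and the function and dictionary names are purely illustrative.

```python
# H200 figures as quoted in the article.
H200 = {"memory_gb": 141, "bandwidth_tb_per_s": 4.8}

# ASSUMPTION: H100 SXM baseline, not stated in the article.
H100 = {"memory_gb": 80, "bandwidth_tb_per_s": 3.35}


def improvement_ratios(new, old):
    """Return new/old for every spec present in both dictionaries."""
    return {key: new[key] / old[key] for key in new if key in old}


ratios = improvement_ratios(H200, H100)
for spec, ratio in ratios.items():
    print(f"{spec}: {ratio:.2f}x")
```

Under that assumed baseline, the ratios come out at roughly 1.76x for capacity and 1.43x for bandwidth, in line with the rounded 1.8x and 1.4x figures. A different H100 variant would give different ratios.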

The introduction of faster and larger High Bandwidth Memory (HBM) aims to accelerate the performance of computationally demanding tasks, particularly benefiting generative AI models and high-performance computing applications. Ian Buck, vice president of high-performance computing products at Nvidia, highlighted these advancements in a video presentation.

Despite these technological advances, the open question is availability. Nvidia acknowledges the supply constraints that have affected the H100 and aims to launch the first H200 chips in the second quarter of 2024. The company is working with global system makers and cloud service providers to ensure availability, but specific production figures have not been disclosed.

The H200 remains compatible with systems that support the H100, providing a seamless transition for cloud providers. Major players such as Amazon, Google, Microsoft and Oracle will be among the first to integrate the new GPUs into their offerings in the coming year.

Although Nvidia is refraining from disclosing the price of the H200, its predecessor, the H100, is estimated to cost between $25,000 and $40,000 each. Demand for these high-performance chips remains strong, with AI companies actively seeking them for efficient data processing in training generative image tools and large language models.

The unveiling of the H200 is part of Nvidia’s efforts to meet growing demand for its GPUs. The company plans to triple production of the H100 in 2024, with a goal of producing up to 2 million units, as reported in August. As the AI landscape continues to evolve, the introduction of the H200 promises enhanced capabilities, paving the way for a more promising year for GPU enthusiasts and AI developers.

