What Is Google’s Gemini AI Model Capable Of? Five Interesting Use Cases Explored

In the race to deploy the most advanced AI language model, OpenAI (along with its largest investor, Microsoft) and Google show no signs of slowing down. OpenAI recently rolled out an update to GPT-4 that added several new capabilities, such as data interpretation and image recognition. Now the Alphabet-owned tech giant has unveiled its most advanced LLM yet, Gemini. Here are five interesting things Google's latest AI model can do.

What is Gemini capable of?

Thanks to its advanced multimodality, Gemini can handle text, images, speech, code, video and more. Google also claims that Gemini is its most flexible model yet, because it can run efficiently on everything from data centers with massive processing power to mobile devices with limited resources. Gemini 1.0, the first release, comes in three sizes optimized for different use cases: Gemini Nano for on-device tasks, Gemini Pro for scaling across a wide range of tasks, and Gemini Ultra for highly complex tasks.

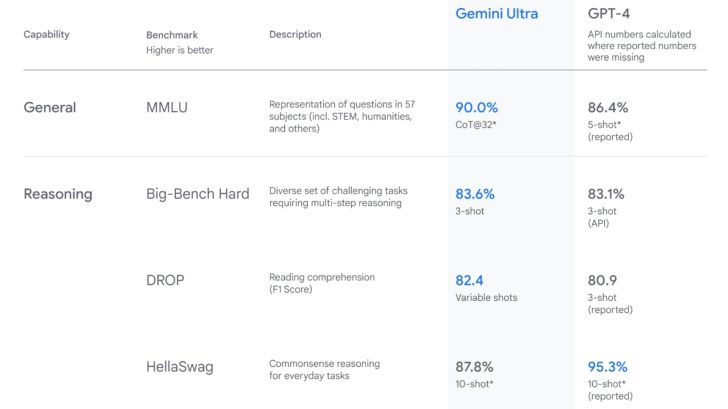

Gemini Ultra vs. GPT-4: what the benchmarks say

According to Google, Gemini Ultra is the first model to outperform human experts on MMLU (massive multitask language understanding), a benchmark spanning 57 subjects, including math, physics, law, medicine and more. Other benchmarks on which Gemini Ultra beats OpenAI's GPT-4 include Big-Bench Hard, DROP, GSM8K, MATH, HumanEval and Natural2Code. This suggests that Gemini Ultra is better at tasks requiring multi-step reasoning, reading comprehension, basic arithmetic, challenging math problems and Python code generation.

Gemini can detect similarities and differences between two images

Google's multimodal AI model can find similarities between images. In a demo video uploaded to the company's YouTube channel, Gemini finds connections between two fairly complex images. It identifies that both share a curved, organic composition, which suggests that it understands what is drawn in each image and can reason across both to produce an answer, all within seconds.

Gemini can explain reasoning and math in simple steps

Google shows how Gemini can read formulas and working steps handwritten on paper and tell the correct ones from the incorrect ones. In the demo, Gemini is asked to focus on one of the problems solved on the sheet and find the calculation error. Gemini spots the mistake correctly and even explains the mathematical or scientific concept behind the formula before performing the correct calculation. This makes Gemini potentially useful for students who struggle with tricky numerical problems in math or physics.

Gemini supports Python, Java, C++ and Go

Another demo video on Google's YouTube channel shows Gemini solving about 75% of 200 Python benchmark problems on the first try, compared with 45% for PaLM 2. When Gemini is also allowed to check and repair its own code, the solve rate rises above 90%, which suggests the model can help programmers remove errors from their programs so they run smoothly.

Gemini can recognize clothes

In another example, Google shows how Gemini can recognize different items of clothing and explain its reasoning. Although Google didn't demonstrate it, Gemini should also be able to suggest outfit ideas based on color combinations and climate. For example, if someone asks what type of jeans or pants go with a down jacket, Gemini should be able to offer some suggestions. Gemini can also identify what is happening in a video, whether someone is making a drawing, performing a magic trick or acting out a scene from a movie.

Gemini can extract data from thousands of research papers in minutes

Manually working through a large body of research can take months of reading and note-taking. In one demo, however, Google shows Gemini picking out the research papers relevant to a study from around 200,000 candidates. Gemini then extracted the required information from those papers and used it to update a specific dataset.

Gemini can also reason about data in charts and graphs and create new ones with updated figures. This way, Google's new AI model can help scientists and academics gather references and citations faster.

Pixel 8 Pro and Bard for a first taste

These demos ran on a custom user interface, which suggests that developers will be able to use Gemini's advanced capabilities to build their own AI-based tools. Google has already brought Gemini Nano to the Pixel 8 Pro, which gains two new features: Summarize in the Recorder app and Smart Reply in Gboard. Google's AI chatbot, Bard, will also benefit from Gemini Pro's capabilities in the coming days.

You can follow Smartprix on Twitter, Facebook, Instagram and Google News. Visit smartprix.com for the latest news, reviews and technical guides.